Google Takes the Lead

Since 2021, OpenAI has set the pace, producing the best models year after year. This year the momentum shifted. Gemini 2.5 and Claude 4 closed the gap with GPT-5 on capability and cost. Gemini 3 now outperforms its competitors on most benchmarks, with its widest lead in multimodal reasoning. OpenAI has gone from frontrunner to follower in a single product cycle.

The irony is that this advantage does not come from a single clever trick. It comes from something far less glamorous: infrastructure. While rivals scrambled to hoard NVIDIA GPUs, Google quietly kept investing in its own Tensor Processing Units (TPUs). The bespoke TPUv7 Ironwood that trained Gemini 3 sits on top of a stack that Google controls almost end to end: data centres, optical networking, compilers, serving systems and the consumer products that harvest data and revenue. Slow and steady wins the race; Google stuck to its strategy, avoided deep dependence on NVIDIA, and kept doing what it does best: everything.

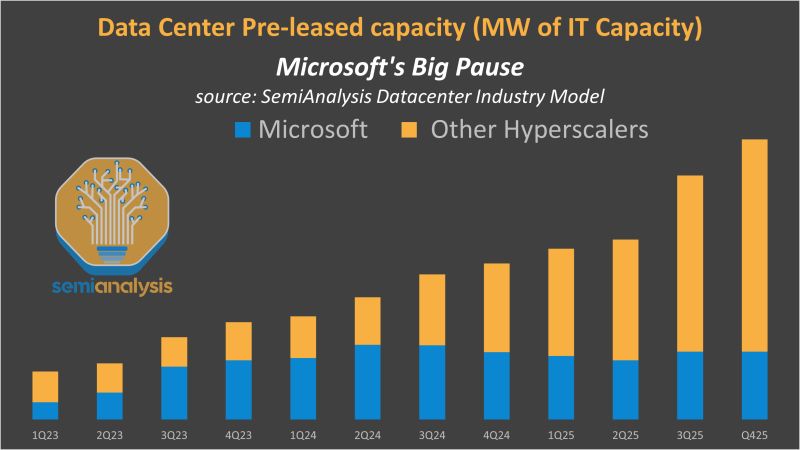

Microsoft (which owns a quarter of OpenAI and provides most of its compute capacity) dropped the ball. After a burst of building in 2023, the firm abruptly paused new data centre leasing in mid-2024, walked away from multi-gigawatt projects and even let the flagship Stargate cluster for OpenAI slip to Oracle (graph below). The caution made sense to accountants, who worried that bare-metal AI clusters would drag on Azure margins and concentrate revenue in a single customer. Strategically, it ceded precious time.

OpenAI has also lost something less tangible. The foundational ideas behind large language models were mostly born inside Google Brain (now Google DeepMind). OpenAI was the first to engineer around them at scale and to rally the innovators. But nowadays, when burnt-out AI researchers cycle through the carousel of billion-dollar offers from the elite labs (Google, Meta, OpenAI, Anthropic), the place they most often return to is Mountain View. It's simply a nicer place to work.

None of this means the race ends with a coronation. Gemini 3 may set records on leaderboards, yet it still hallucinates, still fabricates citations and still fails at some tasks that a competent graduate student could handle. Each new training run consumes more energy, more capital and more scarce chips for increasingly marginal gains. The risk is that this contest turns into a race to the bottom on scale and price rather than a race toward reliability, because the current paradigm of ever-larger language models may have hard limits on truthfulness.

Google today looks ahead in the AI race, backed by unmatched compute and a vertically integrated stack that finally works in its favour.

The question is whether everyone is sprinting toward a finishing line that leads into a wall.

24 November 2025
