Why GPT-5 Fell Flat

- Scaling

- Hallucinations

- Cost of Compute

When an LLM model version is incremented by a whole integer the world expects something big. Especially when you have the CEO saying it’s a "PhD-level expert in your pocket”. If you haven’t caught up with the news, spoiler alert, GPT-5 is barely better than their previous models, and in some cases worse.

I want to examine why, and what it requires to get to a PhD level of expertise. Currently, the capabilities of state-of-the-art LLMs are an average of 50% success rate for a task requiring 1hr for a human to complete, for tasks that take minutes, the success rate is an average of 80%.

Think back on your career and ask yourself: What stage of your career were you at when solving problems that took minutes? Likely you’ll think of high school, undergraduate or even internship stages. When I think back to my PhD work, the minimum time to solve a problem was six months, some took more than a year. This is to say there is a significant jump from 1hr to 4,380hrs of problem solving.

If we believe scaling will conquer all, the big tech companies need to scale their systems over 4000x, at least, to reach PhD-level expertise.This brings me to hallucinations. What happens in those 20% to 50% of failures for minute and hour long tasks? Hallucinations. During training, these models are rewarded for accurate *sounding* answers, for questions they don’t know the answer to they make their answers as accurate *sounding* as possible even if it’s wrong. A lot of work by human researchers needs to be done to verify the correctness of these answers and minimise these hallucinations during training, it seems this was not done well for GPT-5. Even their release showcase was plagued by hallucinations (their bar charts turned into a meme). The whole thing screams rush job.

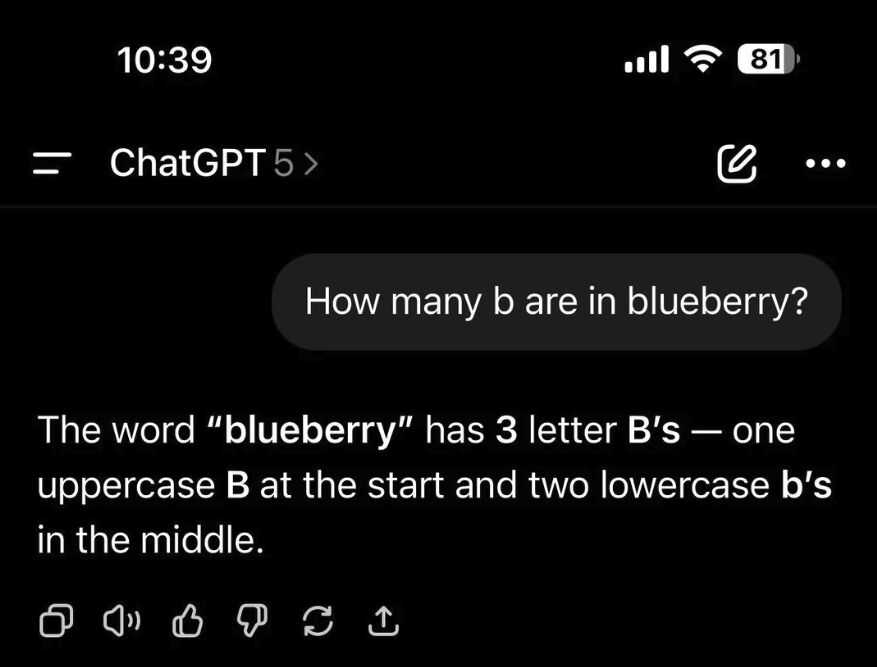

Finally, the nail in the coffin was OpenAI’s strategy to reduce compute costs. Coinciding with the release of GPT-5 they rolled up all of their models into their “model router”. This is a great way to save on compute costs as using a massive thinking model for a user who’s asking “how many b’s are there in blueberry” is probably not a good use of all those KWh of energy. The big mistake is that this was not communicated by OpenAI, and the router only showed GPT-5 as the model, so most users believed they were always using GPT-5 and their smaller models actually don’t know how many b’s there are in blueberry, leading a majority of people to believe there was a regression in model ability. OpenAI has since rolled back this change but made other changes to reduce their compute costs like rate and context limiting.

All in all, ignoring the hype and looking at results. The gains that are being made are diminishing and purse strings are tightening. Expect much longer times between meaningful releases, AI researchers need another ‘aha’ moment before big gains are made. My questions is whether this will come from the US or China next.

11 August 2025

Measure How Much Productivity You Could Gain With Our Calculator

Our productivity calculator reveals the potential costs Traffyk can save your business and improve productivity by when inefficient workforce communication is reduced.